Neural networks underpin much of modern artificial intelligence and have changed how complex problems are approached. This guide explains why they matter in AI, how they function, and where they are applied across industries. It then covers the main challenges and likely future trends, so that professionals can take practical steps toward using neural networks in their own projects.

Key Takeaways:

- Neural networks are foundational to the advances in artificial intelligence, significantly impacting various industries.

- Understanding the basic mechanics of neural networks is essential for leveraging their capabilities effectively.

- Different types of neural networks cater to specific applications, from image recognition to natural language processing.

- Despite their potential, neural networks come with challenges such as large data requirements and limited interpretability.

- Staying abreast of future trends in neural networks will enable professionals to innovate and improve their AI projects.

Introduction to Neural Networks and Their Importance in AI

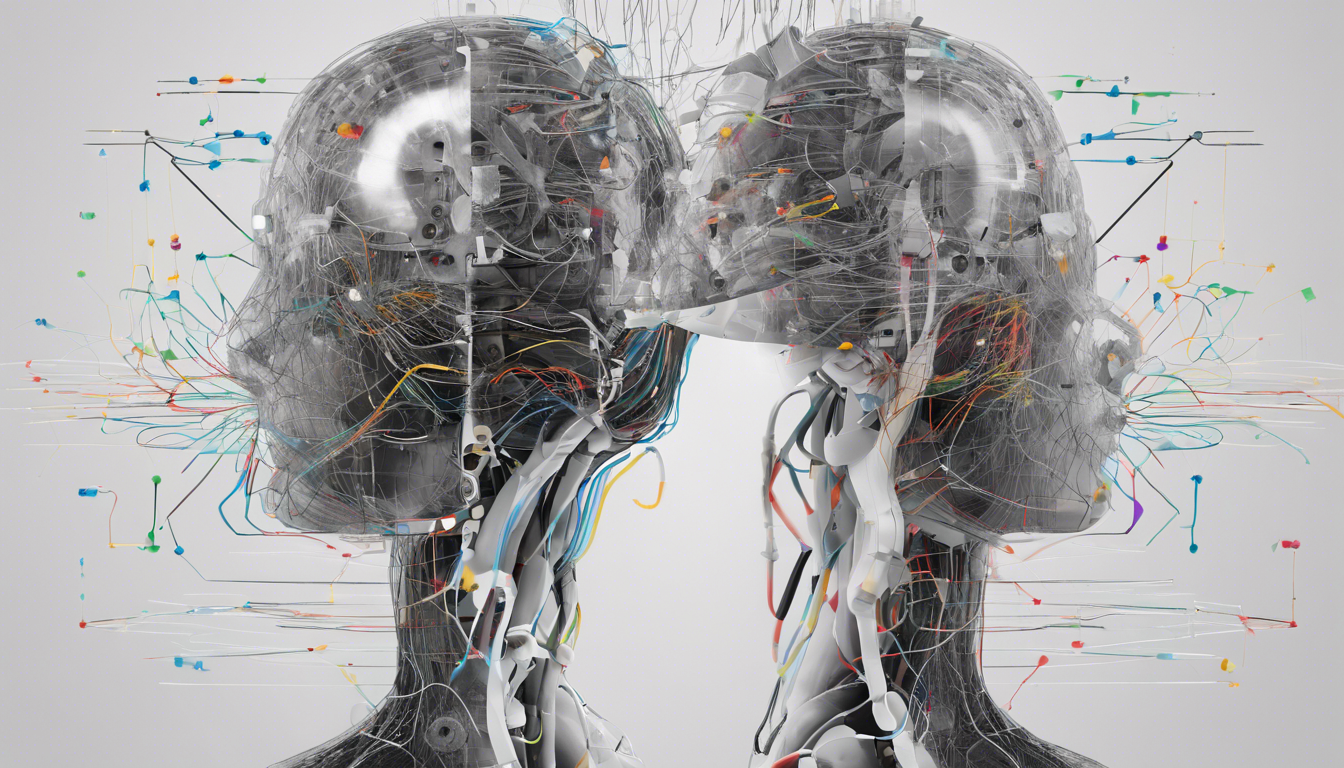

Neural networks, a pivotal component of artificial intelligence (AI), are computational models loosely inspired by the human brain’s architecture. They are composed of interconnected nodes, or neurons, arranged in layers, with each layer building a more abstract representation of the data it receives. Their significance lies in their ability to learn from examples: they adapt their parameters to recognize patterns and make predictions rather than following hand-written rules. In industries ranging from healthcare to finance, neural networks are used to support decision-making, automate complex tasks, and extract insights from unstructured data. As AI continues to evolve, understanding how neural networks work and where they apply is essential for professionals who want to build on them.

How Neural Networks Work: A Simplified Explanation

A neural network consists of layers of interconnected nodes, or ‘neurons,’ where each connection has an associated weight that is adjusted as learning proceeds. The network processes input data layer by layer: the input layer receives external information, hidden layers transform it via weighted sums followed by activation functions, and the output layer produces the final prediction or classification. During training, the model’s predictions are compared to the actual outcomes using a loss function. Backpropagation then computes how much each weight contributed to the error, and an optimizer such as gradient descent iteratively adjusts the weights to reduce it. This systematic procedure is what allows neural networks to perform well in tasks such as image recognition, natural language processing, and predictive analytics, across domains including finance, healthcare, and technology.
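The forward pass and training loop described above can be sketched in plain Python. This is a minimal illustration, not a production implementation: the function names, the three-neuron hidden layer, the sigmoid activations, the mean squared error loss, the learning rate of 0.5, and the XOR toy dataset are all assumptions chosen for the example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    """Forward pass: input -> hidden layer (sigmoid) -> single output (sigmoid)."""
    W1, b1, W2, b2 = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    output = sigmoid(sum(w * h for w, h in zip(W2, hidden)) + b2)
    return hidden, output

def mean_squared_error(data, params):
    return sum((forward(x, params)[1] - y) ** 2 for x, y in data) / len(data)

def train_step(data, params, lr):
    """One full-batch gradient descent step; gradients come from backpropagation."""
    W1, b1, W2, b2 = params
    n_hidden, n_in = len(W1), len(W1[0])
    gW1 = [[0.0] * n_in for _ in range(n_hidden)]
    gb1 = [0.0] * n_hidden
    gW2 = [0.0] * n_hidden
    gb2 = 0.0
    for x, y in data:
        hidden, out = forward(x, params)
        # Error signal at the output: d(loss)/d(pre-activation of the output neuron).
        delta_out = 2.0 * (out - y) * out * (1.0 - out) / len(data)
        gb2 += delta_out
        for j in range(n_hidden):
            gW2[j] += delta_out * hidden[j]
            # Propagate the error back through the output weight and hidden sigmoid.
            delta_h = delta_out * W2[j] * hidden[j] * (1.0 - hidden[j])
            gb1[j] += delta_h
            for i in range(n_in):
                gW1[j][i] += delta_h * x[i]
    # Move every weight a small step against its gradient.
    new_W1 = [[w - lr * g for w, g in zip(w_row, g_row)]
              for w_row, g_row in zip(W1, gW1)]
    new_b1 = [b - lr * g for b, g in zip(b1, gb1)]
    new_W2 = [w - lr * g for w, g in zip(W2, gW2)]
    return new_W1, new_b1, new_W2, b2 - lr * gb2

# Toy dataset (assumption): XOR, which a network without a hidden layer cannot fit.
xor_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

random.seed(0)  # fixed seed so the run is repeatable
params = (
    [[random.uniform(-1, 1) for _ in range(2)] for _ in range(3)],  # W1
    [0.0] * 3,                                                      # b1
    [random.uniform(-1, 1) for _ in range(3)],                      # W2
    0.0,                                                            # b2
)

initial_loss = mean_squared_error(xor_data, params)
for _ in range(2000):
    params = train_step(xor_data, params, lr=0.5)
final_loss = mean_squared_error(xor_data, params)
print(f"loss before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```

In practice a framework would compute these gradients automatically; writing them out by hand here is only meant to make the weight-update loop visible.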

‘The science of today is the technology of tomorrow.’ – Edward Teller

Different Types of Neural Networks and Their Applications

Neural networks, a cornerstone of deep learning, come in various architectures, each suited to particular kinds of data and tasks. Convolutional Neural Networks (CNNs) excel in image processing because they learn to extract features and patterns from visual data automatically, making them a common choice for applications like facial recognition and autonomous driving. Recurrent Neural Networks (RNNs), on the other hand, are designed for sequential data, such as time series and text, because they carry information from previous inputs forward through the sequence. Long Short-Term Memory (LSTM) networks are a variant of the RNN whose gating mechanism helps retain information over longer sequences, which improves performance in domains like speech recognition. For tasks that combine images and sequences, such as video analysis, LSTMs are often paired with CNNs that process the individual frames. Additionally, Generative Adversarial Networks (GANs) train a generator and a discriminator against each other to produce new data that resembles the training set, which has found use in creating realistic images and art. As machine learning expands, understanding these network types and their applications is essential for professionals aiming to apply artificial intelligence to real-world problems.
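To make the difference between convolutional and recurrent processing concrete, here is a small sketch in plain Python. It is illustrative only: the helper names, the hand-picked edge kernel, and the scalar recurrent weights are assumptions, whereas a real CNN or RNN would learn such values during training.

```python
import math

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1), as a CNN layer does.

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a scalar recurrent unit: the new state depends on the old one."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Feed a sequence through the recurrent unit and return the final state."""
    h = 0.0
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
    return h

# A 4x4 "image" with a dark left half and a bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge detector (assumption for illustration).
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, edge_kernel)
print(feature_map)  # the middle column responds where dark meets bright

# The same values in a different order leave the recurrent unit in a different state.
state_early = run_rnn([1.0, 0.0, 0.0])
state_late = run_rnn([0.0, 0.0, 1.0])
print(state_early, state_late)
```

The convolution responds to a local spatial pattern wherever it appears in the image, while the recurrent unit's final state depends on the order in which inputs arrived. That difference is why the two families suit images and sequences respectively.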

Challenges and Limitations of Neural Networks

Neural networks, while offering significant advances in artificial intelligence and machine learning, are not without challenges and limitations. One prominent issue is that supervised training typically requires large amounts of labeled data; with too little data the model may perform poorly or overfit, excelling on the training data but failing to generalize to unseen instances. Additionally, neural networks often function as black boxes, which makes their decisions difficult to interpret and raises concerns about accountability and transparency, particularly in critical areas like healthcare and finance. Furthermore, training deep neural networks can demand substantial computational resources, including specialized hardware and considerable time, which may not be feasible for all organizations. Lastly, robustness is a concern: neural networks can be vulnerable to adversarial attacks, in which slight alterations to the input lead to drastically incorrect outputs, posing risks in real-world applications.
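The adversarial vulnerability mentioned above can be illustrated with a deliberately simple model. The sketch below applies a perturbation in the style of the fast gradient sign method to a linear classifier in plain Python; the weights, input values, and the step size `epsilon` are made-up numbers for illustration, and a deep network would need its input gradient computed by backpropagation rather than read directly from the weights.

```python
import math

def predict_proba(x, weights, bias):
    """Probability of the positive class for a linear (logistic) classifier."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-score))

def sign(v):
    return (v > 0) - (v < 0)

def sign_perturb(x, weights, epsilon):
    """Shift each feature by epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is simply `weights`, so the sign of each weight gives the direction.
    """
    return [xi - epsilon * sign(w) for xi, w in zip(x, weights)]

# Hypothetical 20-feature classifier and input (assumptions for illustration).
weights = [0.5 if i % 2 == 0 else -0.5 for i in range(20)]
bias = 0.0
x_clean = [0.1 if i % 2 == 0 else -0.1 for i in range(20)]

epsilon = 0.15  # maximum change allowed per feature
x_adv = sign_perturb(x_clean, weights, epsilon)

clean_prob = predict_proba(x_clean, weights, bias)
adv_prob = predict_proba(x_adv, weights, bias)
largest_change = max(abs(a - c) for a, c in zip(x_adv, x_clean))

print(f"clean input:     p(positive) = {clean_prob:.3f}")
print(f"perturbed input: p(positive) = {adv_prob:.3f}")
print(f"largest per-feature change:   {largest_change:.3f}")
```

No single feature moves by more than `epsilon`, yet the small shifts all push the score the same way and add up across the 20 features, which is enough to flip the predicted class. The effect tends to grow with input dimensionality, which is one reason high-dimensional inputs such as images are a common target.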

Future Trends in Neural Networks and AI Development

Looking ahead, neural networks are likely to remain central to progress in artificial intelligence. One expected direction is hybrid architectures that integrate spiking and deep learning models, with the aim of improving computational efficiency and more closely emulating how the human brain functions. The growing use of unsupervised and self-supervised learning is changing how AI systems are trained, significantly reducing the need for labeled data. The gradual shift towards explainable AI (XAI) aims to let neural networks not only make predictions but also provide insight into how those predictions were reached, fostering greater transparency and trust among users. Additionally, with the rise of edge computing, we can anticipate wider deployment of neural networks on mobile and IoT devices, where processing data locally reduces latency. Together, these trends are likely to reshape how neural networks are applied across industries, from healthcare to autonomous systems, and to drive further intelligent automation.